The OMol25/UMA Release

the benchmarks; how to run the models; what it means for chemistry and Rowan

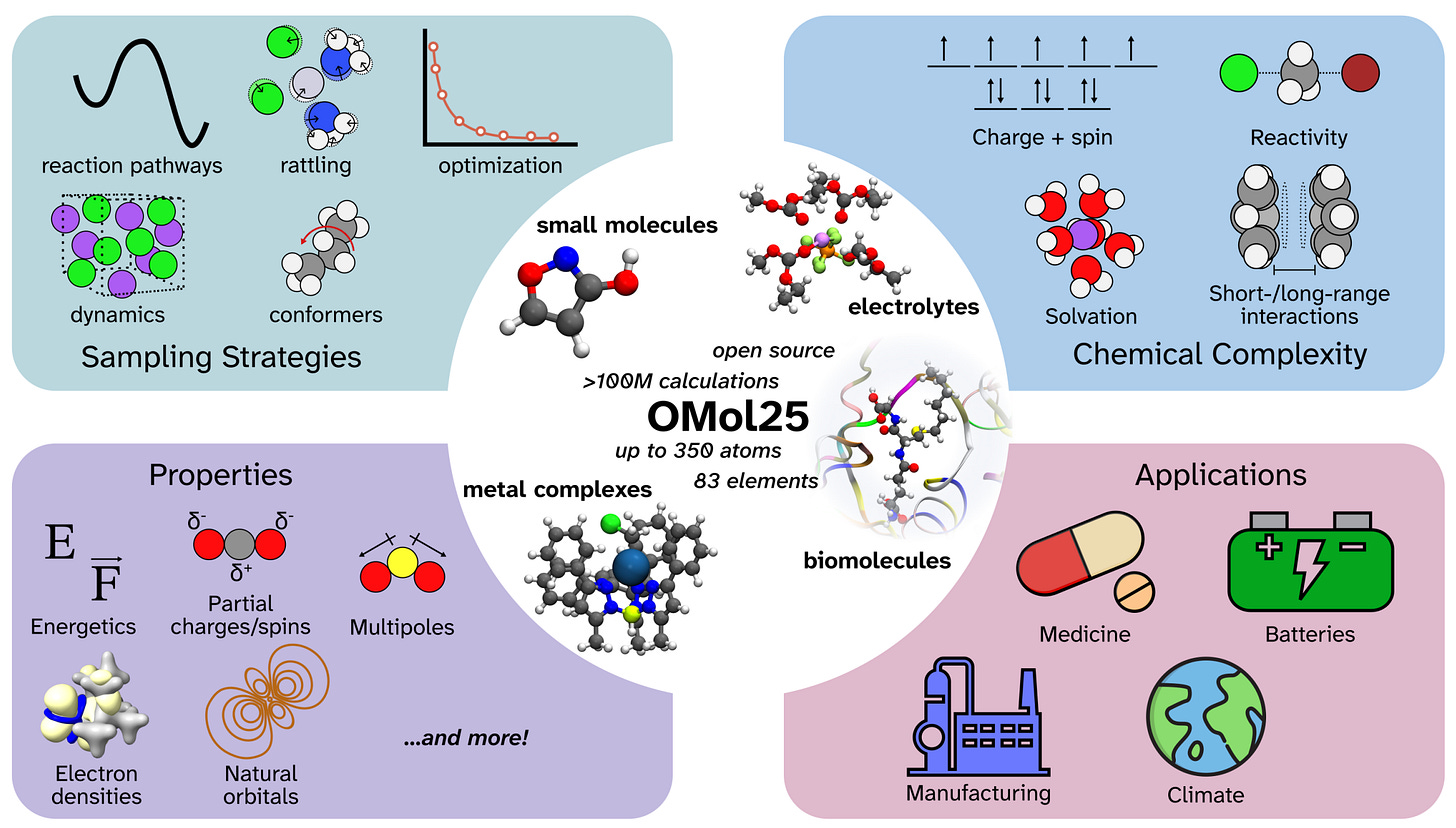

Last week, Meta’s Fundamental AI Research (FAIR) team released Open Molecules 2025 (OMol25), a massive dataset of high-accuracy computational chemistry calculations. Alongside the data, they released several pre-trained neural network potentials (NNPs) trained on it for use in molecular modeling, including a new “Universal Model for Atoms” (UMA) that unifies OMol25 with other datasets from the FAIR-chem team.

We think a lot about NNPs here at Rowan, as existing users will know, so we were pretty well-positioned to appreciate the impact of this work—we listed the models on Rowan within 24 hours, and started running them through our benchmarks right away. Now that the dust has settled a bit, here’s what we’ve learned:

Benchmarking the Models

The Meta models trained on OMol25 perform very well on all the benchmarks we’ve run to date. They’re basically as good as the best range-separated hybrid density functionals with a big basis set, and they achieve virtually perfect results on conformer datasets like Folmsbee and Wiggle150.

We plan to run many more benchmarks in the future, including optimization, frequencies, and periodic systems, but the early results are consistent with this being an “AlphaFold moment” for NNPs (as one Rowan user put it).
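Conformer benchmarks such as Folmsbee and Wiggle150 are typically scored by comparing a model's relative conformer energies against a high-level reference. The Python sketch below shows one common way to compute that error: a mean absolute error after referencing both methods to the conformer that the reference method ranks lowest. It is an illustrative assumption, not necessarily the exact protocol or metric Rowan uses, and the function name `conformer_mae` is hypothetical.

```python
from statistics import mean


def conformer_mae(predicted, reference):
    """Hypothetical helper: mean absolute error of relative conformer energies.

    Both lists hold absolute energies for the same conformers, in the same order
    and the same units (e.g. kcal/mol). Each list is shifted so the conformer
    that the *reference* method ranks lowest sits at zero, so a constant offset
    between the two methods does not count as error.
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    # Index of the lowest-energy conformer according to the reference method.
    i_ref = min(range(len(reference)), key=reference.__getitem__)
    pred_rel = [e - predicted[i_ref] for e in predicted]
    ref_rel = [e - reference[i_ref] for e in reference]
    return mean(abs(p - r) for p, r in zip(pred_rel, ref_rel))
```

Referencing both methods to a single conformer removes the arbitrary constant offset between them, so only the shape of the conformational energy landscape is scored.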

Running the Models on Rowan

We’ve added the small eSEN model trained on OMol25 to Rowan. (This is the only eSEN-based model trained with conservative forces, which generally lead to more stable optimizations and MD trajectories.) You can select this model from the “Level of Theory” dropdown when submitting a calculation.

We plan to add more models in the weeks to come; some of the larger models are not yet available through Hugging Face, so we can’t benchmark or list them yet.
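The point about conservative forces can be illustrated with a toy model. A conservative force is defined as the negative gradient of an energy, F = -∇E, so integrating it conserves total energy up to integrator error; a force predicted directly, without an underlying energy, carries no such guarantee. The Python sketch below is a hypothetical 2-D harmonic well, not an eSEN model. It runs velocity Verlet once with the exact gradient force and once with a "direct" force that adds a small rotational error term, an assumed error model chosen only because it cannot be the gradient of any energy.

```python
def harmonic_energy(x, y, k=1.0):
    """Potential energy U(x, y) = k (x^2 + y^2) / 2 of a toy 2-D harmonic well."""
    return 0.5 * k * (x * x + y * y)


def conservative_force(x, y, k=1.0):
    """Force taken as the exact negative gradient of the energy, F = -dU/dr."""
    return -k * x, -k * y


def direct_force(x, y, k=1.0, eps=0.05):
    """Hypothetical 'direct' prediction: the true force plus a rotational error
    eps * (-y, x), which has nonzero curl and so is not the gradient of any energy."""
    fx, fy = conservative_force(x, y, k)
    return fx - eps * y, fy + eps * x


def total_energy(x, y, vx, vy, k=1.0, m=1.0):
    """Potential plus kinetic energy of the particle."""
    return harmonic_energy(x, y, k) + 0.5 * m * (vx * vx + vy * vy)


def velocity_verlet(force_fn, steps=10_000, dt=0.01, m=1.0):
    """Integrate one particle with velocity Verlet; return (initial, final) total energy."""
    x, y, vx, vy = 1.0, 0.0, 0.0, 1.0  # circular orbit when k = m = 1
    e0 = total_energy(x, y, vx, vy, m=m)
    fx, fy = force_fn(x, y)
    for _ in range(steps):
        # Half-step velocity update, full-step position update.
        vx += 0.5 * dt * fx / m
        vy += 0.5 * dt * fy / m
        x += dt * vx
        y += dt * vy
        # Recompute forces at the new position, then finish the velocity update.
        fx, fy = force_fn(x, y)
        vx += 0.5 * dt * fx / m
        vy += 0.5 * dt * fy / m
    return e0, total_energy(x, y, vx, vy, m=m)


if __name__ == "__main__":
    print("conservative:", velocity_verlet(conservative_force))
    print("direct:      ", velocity_verlet(direct_force))
```

With the gradient force, the total energy stays essentially constant over 10,000 steps; with the rotational error term, it grows steadily, because that term does net positive work on every orbit. Errors in real direct-force models are not literally rotational, but any non-gradient component can likewise cause energy drift in MD.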

Understanding the Release

Plenty of people have reached out asking us what we think of the OMol25 release, what this means for the field moving forward, and what the strengths and limitations of these models are. We wrote a long-form piece with our thoughts, which you can read here. (Spoilers: we still need better solutions for charge, spin, and solvent effects—and this dataset is going to be pretty expensive to train on for academics.)

If you have responses to any of our points, please let us know!

Perhaps a comparison should also be done with transition-metal sets (like MOBH35) and barrier-height benchmarks (like BH9)?